Artificial Intelligence and Hidden Learning: Are “Weights” a risk?

If we still do not know exactly how artificial intelligence adjusts the weights during learning within the hidden layers, can we really predict its evolution?

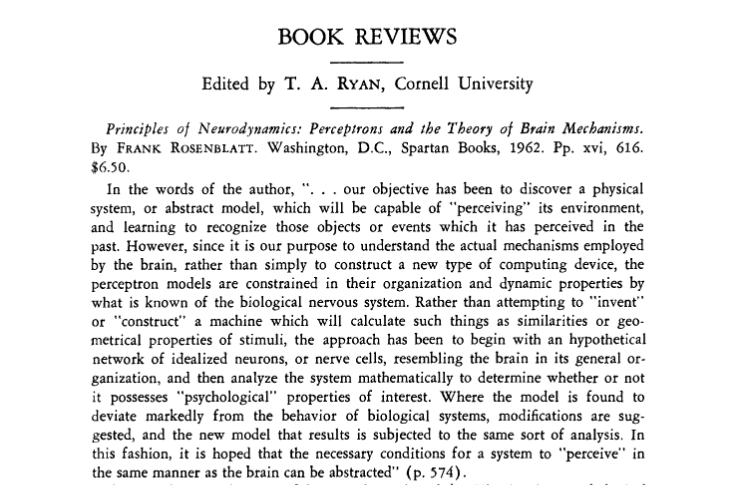

In 1962, Frank Rosenblatt (1928-1971), inventor of the perceptron, said that the goal of artificial neural network research should be "to investigate the physical structures and neurodynamic principles underlying natural intelligence." Perceptrons were among the earliest artificial neural network models.

In the beginning there was the perceptron

In the preface to Principles of Neurodynamics (1962), Rosenblatt wrote that, for him, the perceptron was not a path to artificial intelligence but rather an investigation of the physical structures and neurodynamic principles underlying natural intelligence. Over the years, neural networks have proven useful and versatile, solving some problems more efficiently than other computational methods. As a result, the goal shifted from using artificial networks to study the nervous system to making the "machines" faster and better at the tasks assigned to them. Even so, many researchers have continued to study algorithms that might explain how synapses develop in the nervous system, now with the aim of applying them to improve artificial neural networks.

Nervous system and artificial neural networks

The nervous system transmits information through electrical signals sent and received by neurons: stimuli are picked up by receptors, converted into electrical impulses, processed by the network of neurons, and finally translated into action by effectors. The main components of a neuron are the cell body (called the soma), the dendrites, the axon, and the synapses. Synapses are widely regarded as the key to understanding how memory works.

An artificial neural network, on the other hand, is an algorithm that attempts to mimic the functioning of the nervous system to solve specific problems or tasks. These algorithms are made up of many units capable of performing simple operations, called "neurons", linked together by numerous connections called "synapses". As in the human brain, the network as a whole has capabilities far beyond those of any single neuron. Deep Learning (DL) and Reinforcement Learning (RL) are two families of machine learning techniques used to solve many problems, including image recognition, machine translation, and decision making for complex systems.
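To make the analogy concrete, here is a minimal Python sketch of a single artificial neuron, assuming the common textbook formulation: a weighted sum of the inputs plus a bias, passed through an activation function. The names `neuron`, `step` and `sigmoid` are illustrative and not taken from any particular library.

```python
import math

def step(z):
    """Threshold activation, as in early perceptron-style units."""
    return 1 if z > 0 else 0

def sigmoid(z):
    """Smooth activation that squashes z into the interval (0, 1)."""
    return 1 / (1 + math.exp(-z))

def neuron(inputs, weights, bias, activation=step):
    """One artificial neuron: weighted sum of inputs plus bias, then an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)

# With these hand-picked weights the neuron behaves like a logical OR.
for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(x, neuron(x, [0.6, 0.6], -0.5))
```

A single unit like this can only draw one straight dividing line through its inputs; the richer behaviour described above comes from connecting many of them.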

A neural network is a massively parallel distributed processor made up of many simple processing units, with a natural tendency to store experiential knowledge and make it available for use. It resembles the brain in two respects:

- Knowledge is acquired from the environment through a learning process.

- The strengths of the connections between neurons, called "synaptic weights", are used to store the acquired knowledge.

[An Introduction to Neural Computing. I. Aleksander and H. Morton (1990)]

What are the "weights" for?

Before an artificial neural network can do anything, such as solve a problem, it has to learn how to do it. What the system learns is stored in its "weights": the values assigned to the connections between neurons. These values change as the network learns, depending on the machine's architecture, the learning method, and the stimuli it receives. A weight determines how much a change in an input affects the output: a weight close to zero means that changing that input barely changes the output, whereas a larger weight (in absolute value) makes the output respond much more strongly. Artificial neural networks solve different problems but use more or less the same "cognitive" structure, so the same algorithms can be reused on different machines. "Structure modules" are blocks of neurons that know how to do a particular job; by chaining several modules together, a machine can be made to perform increasingly complex tasks.
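The claim that a weight controls how strongly an input affects the output can be checked numerically. This is a minimal sketch assuming a single sigmoid neuron with two inputs and the hypothetical weights 0.05 and 2.0: nudging each input by the same amount moves the output far more through the heavily weighted input.

```python
import math

def sigmoid(z):
    return 1 / (1 + math.exp(-z))

def output(x, weights, bias=0.0):
    """Output of a single sigmoid neuron."""
    return sigmoid(sum(xi * wi for xi, wi in zip(x, weights)) + bias)

def output_change(x, weights, index, delta=0.1, bias=0.0):
    """How much the output moves when input `index` is increased by `delta`."""
    nudged = list(x)
    nudged[index] += delta
    return output(nudged, weights, bias) - output(x, weights, bias)

weights = [0.05, 2.0]  # hypothetical: one small weight, one large weight
x = [0.5, 0.5]
for i, w in enumerate(weights):
    print(f"input {i} (weight {w}): output changes by {output_change(x, weights, i):+.4f}")
```

For a sigmoid unit the change is roughly the weight times the input change, scaled by the slope of the sigmoid at the current point, so a weight of exactly zero disconnects that input entirely.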

But how do machines learn?

Supervised learning

Supervised learning is essentially a guided training process: the artificial neural network is shown example inputs that represent the problem, each paired with the output it should produce, so that the AI can calibrate its "weights" correctly. Training continues until the difference between the desired output and the output actually produced by the machine is minimal.
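One concrete, historical instance of supervised learning is Rosenblatt's perceptron learning rule: after each example, every weight is nudged in proportion to the error (desired output minus produced output). The sketch below trains a single threshold neuron on the logical AND function; the learning rate and epoch limit are arbitrary choices, and modern networks usually rely on gradient descent with backpropagation instead.

```python
def predict(weights, bias, x):
    """Threshold neuron: fires (1) if the weighted sum plus bias is positive."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0

def train_perceptron(samples, lr=1.0, max_epochs=20):
    """Perceptron learning rule; stops early once a full epoch has no errors."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(max_epochs):
        errors = 0
        for x, target in samples:
            error = target - predict(weights, bias, x)  # desired minus produced
            if error != 0:
                errors += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if errors == 0:
            break
    return weights, bias

AND = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
w, b = train_perceptron(AND)
print([predict(w, b, x) for x, _ in AND])  # [0, 0, 0, 1]
```

The loop stops as soon as the difference between desired and produced outputs is zero on every example, which is the stopping condition described above in its simplest form.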

Artificial neural networks can also learn without supervision. In this case no desired outputs are provided: the networks learn, in a self-organizing way, from the stimuli of the environment in which they operate. A well-known example is Kohonen's self-organizing map (SOM), a network that represents complex, non-linear relationships within large amounts of data as much simpler geometric relationships, typically on a low-dimensional grid of neurons.
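A minimal sketch of a self-organizing map, assuming the textbook Kohonen update: for each input, find the best-matching unit (the node whose weight vector is closest) and pull it and its grid neighbours toward the input, with a learning rate and neighbourhood radius that shrink over time. Here 2-D points are mapped onto a 1-D chain of 10 nodes; the schedule and all parameter values are illustrative assumptions. Note that no target outputs appear anywhere.

```python
import math
import random

def best_matching_unit(weights, x):
    """Index of the node whose weight vector is closest (Euclidean) to x."""
    return min(range(len(weights)),
               key=lambda i: sum((w - xi) ** 2 for w, xi in zip(weights[i], x)))

def train_som(data, n_nodes=10, epochs=50, lr0=0.5, radius0=3.0, seed=0):
    """Train a 1-D chain of nodes on vector data with a Kohonen-style update."""
    rng = random.Random(seed)
    dim = len(data[0])
    weights = [[rng.random() for _ in range(dim)] for _ in range(n_nodes)]
    total_steps = epochs * len(data)
    step = 0
    for _ in range(epochs):
        for x in rng.sample(data, len(data)):  # shuffled pass over the data
            frac = step / total_steps
            lr = lr0 * (1 - frac)                      # shrinking learning rate
            radius = max(radius0 * (1 - frac), 0.5)    # shrinking neighbourhood
            bmu = best_matching_unit(weights, x)
            for i in range(n_nodes):
                # Gaussian neighbourhood measured along the 1-D grid, not in input space.
                h = math.exp(-((i - bmu) ** 2) / (2 * radius ** 2))
                weights[i] = [w + lr * h * (xi - w) for w, xi in zip(weights[i], x)]
            step += 1
    return weights

rng = random.Random(42)
data = [[rng.random(), rng.random()] for _ in range(200)]  # unlabeled 2-D points
weights = train_som(data)
print(best_matching_unit(weights, [0.1, 0.1]))
```

After training, neighbouring nodes along the chain usually end up with nearby weight vectors, so the chain acts as a simplified, one-dimensional picture of the 2-D data.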

Reinforcement learning

Reinforcement learning relies on continuous interaction between the machine, its environment, and the actions it takes. Each action yields a reward, and over many repetitions the machine tries to maximize the total reward it collects. A larger reward is associated with a better action taken by the system on the environment in response to a given input. Feedback from the environment can be positive (a reward) or negative (a penalty). Learning is continuous and does not depend on a pre-collected dataset; instead, it improves as the system operates in the real world. This makes RL particularly interesting in robotics, where its benefits are best exploited. In short, by exploring environment behaviors that nobody anticipated, RL can let a machine learn strategies that go beyond what humans explicitly taught it.
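A minimal sketch of this reward-driven loop, using tabular Q-learning (one standard RL algorithm, chosen here only for illustration). The environment is a hypothetical five-cell corridor: moving into the rightmost cell earns +1, every other move costs a small penalty of -0.01. The agent keeps a table `q[state][action]` of estimated future reward, usually picks the action with the highest estimate, and sometimes explores at random. All parameter values are illustrative assumptions.

```python
import random

N_STATES = 5        # hypothetical corridor cells 0..4; cell 4 is the goal
ACTIONS = (0, 1)    # 0 = move left, 1 = move right

def step(state, action):
    """Toy environment: +1 reward at the goal, small penalty for any other move."""
    nxt = max(0, state - 1) if action == 0 else state + 1
    if nxt == N_STATES - 1:
        return nxt, 1.0, True
    return nxt, -0.01, False

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the length of each episode
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)                      # explore
            else:
                action = max(ACTIONS, key=lambda a: q[state][a])  # exploit
            nxt, reward, done = step(state, action)
            # Target: immediate reward plus discounted best estimate from the next state.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if done:
                break
    return q

q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
print(policy)  # [1, 1, 1, 1]: "move right" from every non-goal cell
```

Nobody tells the agent that "right" is correct; the preference emerges only from rewards and penalties, which is the key difference from the supervised example.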

Are there any risks?

A neural network typically contains hidden layers (located between the input and output layers) that apply transformations to the input data. It is largely in the connections feeding the nodes of these hidden layers that the "weights" are applied. If we still do not know exactly how artificial intelligence adjusts the weights during learning within the hidden layers, can we really predict its evolution?
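A small example of what a hidden layer does: a single perceptron cannot compute XOR, but a network with one hidden layer can. In this sketch the weights are chosen by hand (they are hypothetical, not learned) so that hidden unit 0 acts like OR and hidden unit 1 like AND, and the output unit combines them.

```python
def step(z):
    return 1 if z > 0 else 0

# Hand-chosen, hypothetical weights: hidden unit 0 behaves like OR, hidden unit 1 like AND.
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [-0.5, -1.5]
OUT_W = [1.0, -1.0]   # output fires for "OR but not AND", i.e. XOR
OUT_B = -0.5

def forward(x):
    """Return (hidden activations, output) for a 2-2-1 threshold network."""
    hidden = [step(sum(w * xi for w, xi in zip(ws, x)) + b)
              for ws, b in zip(HIDDEN_W, HIDDEN_B)]
    out = step(sum(w * h for w, h in zip(OUT_W, hidden)) + OUT_B)
    return hidden, out

for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, forward(x))
```

Here each hidden unit has a human-readable role only because the weights were picked by hand. When the same weights are learned from data, nothing forces the hidden units to line up with concepts we can name, and that gap is what the question above is about.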

Further reading

- W. S. McCulloch, W. H. Pitts (1943). "A logical calculus of the ideas immanent in nervous activity." Bulletin of Mathematical Biophysics, 5, 115–133.

- F. Rosenblatt (1962). "Principles of Neurodynamics." Spartan Books.

- S. Haykin (2008). "Neural Networks and Learning Machines." New York: Pearson Education.

- I. Aleksander, H. Morton (1990). "An Introduction to Neural Computing."

- T. Kohonen, E. Oja, O. Simula, A. Visa, J. Kangas (1996). "Engineering applications of the self-organizing map." Computer Science.

- D. De Paoli (2020). "Reti neurali artificiali e apprendimenti basati sulla biofisica dei neuroni" [Artificial neural networks and learning based on the biophysics of neurons]. Alma Mater Studiorum Università di Bologna.

- J. Dong, S. Hu (1997). "The progress and prospects of neural network research." Information and Control, 26(5), 360–368.

- J. Balcazar (1997). "Computational power of neural networks: A characterization in terms of Kolmogorov complexity." IEEE Transactions on Information Theory, 43(4), 1175–1183.

- J. J. Hopfield (1982). "Neural networks and physical systems with emergent collective computational abilities." Proc. Natl. Acad. Sci. U.S.A., 79, 2554–2558.

- R. Roy (2020). "AI, ML, and DL: How not to get them mixed!" Medium.